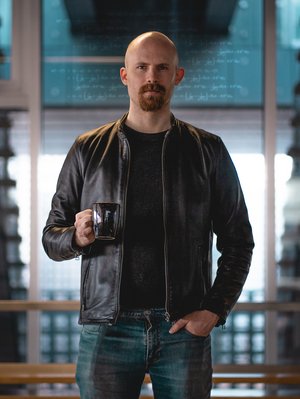

In 2020, Chris Rene Schwiegelshohn started as Tenure Track Assistant Professor in the Algorithms and Data Structures research group at the Department of Computer Science.

At the department Chris will research into the computational challenges of machine learning. In his view, machine learning has recently been one of the most fruitful fields of computer science, while theoretical computer science has trouble keeping up. Many machine learning algorithms achieved tremendous results in various domains. However, our understanding of these algorithms is often poor from a theoretical perspective. Furthering our knowledge in this area is not an idle endeavor of only academic interest; the success of many machine learning algorithms in areas where there would not necessarily be a reason to expect any is intriguing, startling, and perhaps hinting at a deeper connection between computer science and other fields. It is certainly exciting to be part of this development.

Academic background

Chris did his PhD at TU Dortmund, Germany, under the supervision of Christian Sohler. He eventually ended up in Sapienza University, first as a postdoc under the supervision of Stefano Leonardi before becoming a faculty member of the department of computer engineering in 2019. In 2020, he was very happy to join the Department of Computer Science, at Aarhus University.

Chris started his research working mainly on streaming algorithms for machine learning and graph problems. In time, his interests have branched out to approximation algorithms, online algorithms, and classic data structures. He is particularly interested in applications of random projections and sampling for data and dimension reduction. These methods are extremely simple to implement leading to widespread use, while at the same time having an astonishing depth regarding their computational power. Indeed, for almost all problems for which they find applications, their performance is optimal or nearly optimal.

Chris' most important contributions in the field

Chris' most important contributions in the field include the paper

- “On the Local Structure of Stable Clustering Instances”, published at FOCS 2017 with Vincent Cohen-Addad.From a theoretist perspective, clustering is considered a hard problem, a view not shared by practitioners. An emerging field called “beyond worst case analysis” aims at integrating practical model assumptions within a theoretical framework. The aforementioned paper showed that practitioners regard generally clustering as easy because essentially all model assumption are inherently a property on the local optimality of clustering. Since locally optimal solutions are significantly easier to compute than globally optimal ones, it explains the dichotomy between the practical and theoretical view.

Two other important papers are in the field of coresets.

- The 2018 NeurIPs paper “On Coresets with Logistic Regression” coauthored with Alexander Munteanu, Christian Sohler, and David Woodruff. Coresets are succinct summaries that approximate the cost of any candidate solutions up to a small factor. They are frequently used to speed up algorithms, or when stringent memory constraints need to be considered. Obtaining coresets for logistic regression has been a open problem for a number of years. Unfortunately, the aforementioned paper showed that logistic regression admits no coresets in the worst case. On the positive side, the paper identified a natural assumption under which a coreset construction is possible and devised a sampling distribution that outperformed all previously proposed methods in benchmark data sets.

- The other important paper on coresets considers classic k-clustering problems such as k-median and k-means. Arguably, these problems have pushed coreset research the most. Despite best efforts of the research community, previous coreset frameworks exhibited a number of drawbacks, including being limited to spaces of bounded VC dimension, and requiring k² points or more in certain case. In a recent work entitle “A New Coreset Framework for Clustering” accepted at STOC 2021 and coauthored with Vincent Cohen-Addad and David Saulpic, the quadratic dependency on k was replaced with a nearly linear one, which is optimal. The framework also yields improved bounds in all other parameters in all previously studied metrics.